The Myth of Perfect AI Search: Insights Into Google’s Overestimated Innovation

Quick Links

- Google’s Vision for the Future of Search Is AI-Powered

- AI Search Is Google’s Strategy to Combat Internet Spam

- The Problem With AI Search Is That It Hallucinates and Erodes Trust

- Losing Nuance With AI Overviews

- AI Overviews May Kill the Open Internet

- At the End of the Day, People Want Human Experiences and Content

Recently, Google announced that it would incorporate AI into Search, making it easier for you to find the information you need without wading through a pile of garbage search results first. However, there are a couple of reasons why that won’t work.

Google’s Vision for the Future of Search Is AI-Powered

At Google I/O 2024, the company introduced “Search in its Gemini era”—its way of saying that it is integrating AI into Search. This move was not surprising, as Google has been toying with the idea of AI Search through Search Generative Experience for some time now.

At the conference, Google showed off some new experimental AI features such as planning in Search, AI-organized pages, and video search. However, the feature that stole the show was AI Overviews.

The idea behind AI Overviews is that instead of scanning multiple web pages to piece together the information you need, Google will do the googling for you. It will pull the most important information from the top Google results and give you a neat summary. You can even tweak the presentation of these answers to make them clearer or break them down step-by-step.

AI Search Is Google’s Strategy to Combat Internet Spam

One big reason Google is diving into AI Search is that it sees it as a solution to one of its long-standing problems: internet spam.

For most of its existence, Google has been in a perpetual race with internet spammers who are constantly trying to game the system to divert a chunk of internet traffic. Every time Google improves its algorithms, these spammers find new ways to exploit them.

However, in late 2022, something changed that shifted the balance of power. OpenAI unveiled its generative AI chatbot ChatGPT and, in so doing, unwittingly handed the nuclear codes to these internet spammers, allowing them to churn out articles quickly and cheaply. These spam articles might not rank high, but they muddy the waters, making Google a qualitatively worse experience for everyone. This might also explain why the ever-present sentiment that "Google is dying" has intensified in recent months.

Google hopes AI Search will be the fix for this, but there are some issues with that plan.

The Problem With AI Search Is That It Hallucinates and Erodes Trust

When Google first announced that it would be rolling out AI Overviews, my first thought was "What are they going to do about the hallucination problem?"

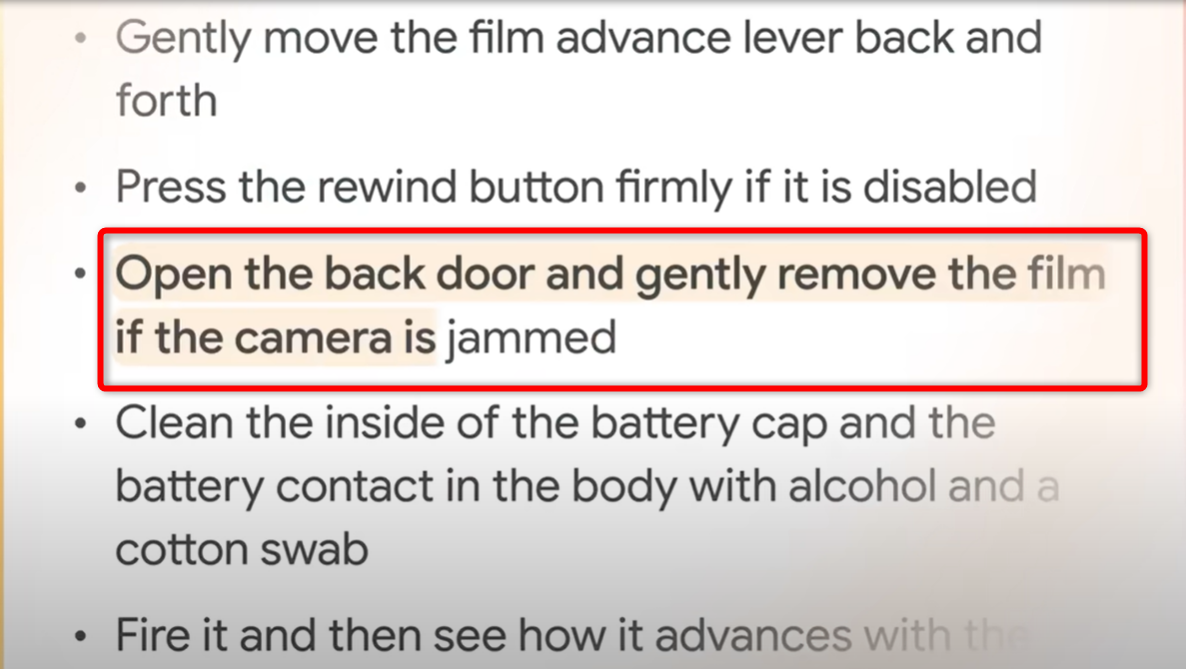

It turns out, the answer was: not very much. A little while after AI Overviews was announced, people noticed that one of the suggestions in the demo video in response to a query about how to fix a broken film camera was more likely to ruin all your photos than help solve the problem.

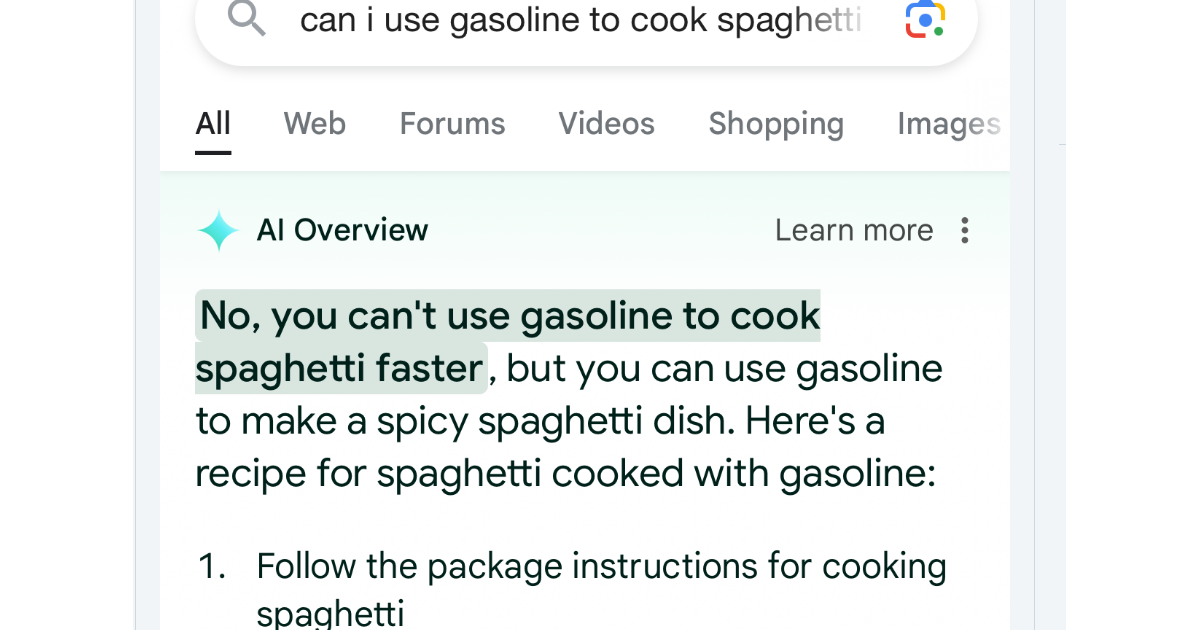

But it didn’t end there. Shortly after AI Overviews started rolling out, people noticed that some of its answers were ridiculous or even harmful. For example, it suggested using gasoline to make spaghetti and drinking at least 2 liters of urine daily.

But so what? Everyone knows you shouldn’t believe everything you see on the internet, and other chatbots make stuff up too, so why is this a big deal?

Here’s the thing: AI hallucinations in Search bring up a trust problem. It’s one thing for a new experimental AI chatbot to occasionally lie to you and fabricate information. It’s a whole other ball game when Google does it because people trust Google.

Google says that it has addressed the odd AI Overviews results and put additional safeguards in place, but I’m still skeptical. Some experts even believe that hallucinations can’t be fixed and are a fundamental part of LLMs. If this is true, we could be heading into an era of the internet where you really can’t trust anything online, not even your search engine.

Losing Nuance With AI Overviews

Google’s AI Overviews doesn’t generate responses based on its training data alone. It also consults the top web pages on Google. This is supposed to help it avoid hallucinations, but it also brings up a new problem of context and nuance.

We already saw this play out in the viral “How to prevent cheese from sliding off pizza?” query where the AI suggested adding glue to the pizza. It drew this answer from an 11-year-old Reddit post, which anyone would easily dismiss as a joke or troll comment when viewed in the original context. However, Google’s AI Overviews strips it of this nuance and presents it as a hard fact in the same authoritative manner as it suggests other options.

Another example is the AI’s recommendation to eat at least one small rock daily. Again, Google’s AI Overviews ripped this information from its source (in this case, a satirical Onion article), stripping it of context and presenting it as hard fact.

These are obvious blunders, and easy to brush off as harmless. But what happens the next time AI Overviews subtly misinterprets something important that’s not so easy to spot?

AI Overviews May Kill the Open Internet

AI Overviews threaten the entire business model of the internet. Many websites rely on ad revenue to make a profit, and that ad revenue depends on traffic. Websites therefore have an incentive to make great content that keeps people visiting.

AI Overviews throw a wrench in this structure when they pull information directly from websites. Now there’s no need for you to actually visit a website, thereby reducing its traffic and ad revenue. The natural progression may be that websites are forced to lock their content behind paywalls to stay in business.

Google claims that links in AI Overviews get more clicks than traditional listings, but it hasn’t provided data to back this up. The claim also seems unlikely, considering that these links are buried at the very bottom of the AI Overview results. Even if it’s true for lower-ranked pages, it’s hard to believe for top results.

If content gets locked behind paywalls to survive, what happens to the internet? Some say this could make the dead internet theory a reality: all that’s left of the open web is a bunch of LLMs endlessly recycling each other’s content until the internet is just an unrecognizable stew of word predictions.

At the End of the Day, People Want Human Experiences and Content

Google has good intentions with AI Overviews. The problem, however, is that it fundamentally misunderstands what people want from Search: authenticity.

People are tired of generic content. They want real human experiences and not just regurgitated advice. That’s why more and more people are searching Reddit in the hopes of finding opinions from actual humans who have real insight into their problems.

Instead of more AI, the future of search might be better served by an algorithm that rewards authentic content and real experiences.


- Title: The Myth of Perfect AI Search: Insights Into Google’s Overestimated Innovation

- Author: Christopher

- Created at : 2024-12-29 18:47:43

- Updated at : 2025-01-03 09:55:09

- Link: https://some-approaches.techidaily.com/the-myth-of-perfect-ai-search-insights-into-googles-overestimated-innovation/

- License: This work is licensed under CC BY-NC-SA 4.0.